TL;DR: Docker isn’t just a “DevOps” buzzword; it’s a lifeline for those of us building, learning, and putting machine learning (ML) to work in real-world businesses. From reproducibility to smoother onboarding, Docker has shaped how I view the intersection of code and AI. Here’s how.

I remember the moment I first discovered Docker as an IT consultant. It started out as someone else’s tool—one more bit of jargon on client calls (“Can you spin it up in a container?”). It felt distant then. But with my transition towards AI and machine learning consulting, Docker swiftly moved from “nice to know” to “absolutely must know.”

If you’re coming from a traditional IT background like me, you’re used to managing servers, wrangling software dependencies, and the perennial struggle of “it works on my machine!” Docker wasn’t built for ML, but learning it felt like I’d found the missing link between my sysadmin instincts and my passion for AI.

What is Docker, Really?

At its core, Docker is a tool that lets you package code, runtime, libraries, and dependencies into something called a container. Think of a container as a self-contained box—where everything your program needs to run travels with it, no matter where you send it.

Why does this matter in machine learning? Anyone who has tried to run an LLM (Large Language Model), or even train a simple neural net, knows the pain of conflicting library versions, CUDA nightmares, or dependencies that only work on a teammate’s machine. Docker takes most of that pain away.

Three Reasons I Can’t Imagine ML Without Docker

1. Reproducibility

ML models are finicky. Your results depend on so many moving parts: data, code, OS, GPU drivers, and specific library versions (PyTorch 1.10 versus 1.13, anyone?). Docker lets you snapshot the code, the OS userland, and the library versions into an image, so I can send it to you, your analyst, or a cloud server and expect it to behave the same way each time. GPU drivers are the one exception: they live on the host, so the host still needs a compatible driver.
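To make the idea concrete, here is a minimal sketch of pinning an environment. The ml-demo folder, the base image tag, the pinned version, and train.py are all illustrative assumptions of mine, not a recommended setup.

```shell
# Create a project folder with a Dockerfile that pins the environment.
# Assumptions: the ml-demo folder, the image tag, the pinned version,
# and train.py are placeholders chosen for illustration.
mkdir -p ml-demo
cat > ml-demo/Dockerfile <<'EOF'
# Pin the base image so the OS and Python version stay fixed.
FROM python:3.10-slim

# Pin the library version so every build installs the same package.
RUN pip install --no-cache-dir torch==1.13.1

WORKDIR /app
COPY . /app

# train.py is a hypothetical entry point for your own code.
CMD ["python", "train.py"]
EOF

echo "Wrote ml-demo/Dockerfile"
```

From there, 'docker build -t ml-demo:0.1 ml-demo' should build the image, and 'docker run --rm ml-demo:0.1' should run it once a train.py exists in the folder.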

2. Collaboration

When working with data scientists, ops engineers, or stakeholders, Docker bridges the “works-for-me” gap. We share Docker images, and there are no more frantic “did you pip install the right version?” exchanges. Dockerfiles can be committed to code repos, making our workflow visible and transferable.
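Sharing an image usually comes down to two commands, tag and push. The helper below is a small sketch of that; the function name and the registry path in the example are hypothetical, so swap in whatever registry your team actually uses.

```shell
# share_image LOCAL_IMAGE REMOTE_IMAGE
# Tags a local image with a registry name, then pushes it
# so teammates can pull exactly the same image.
share_image() {
  docker tag "$1" "$2" &&
  docker push "$2"
}

# Example (registry.example.com/team is a hypothetical registry path):
# share_image ml-demo:0.1 registry.example.com/team/ml-demo:0.1
```

A teammate would then run 'docker pull' with the same remote name to get the identical environment.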

3. Faster Experimentation

Want to try an LLM or the latest research repo? Many projects publish their environments as Docker images. Instead of spending hours on setup, you: pull → run → play. You can snapshot containers, roll back, and experiment freely, without fear of breaking your real machine.
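Here is roughly what pull → run → play looks like in a terminal. The helper name try_image is my own, and the image in the example is just a convenient public one, not a specific research environment.

```shell
# try_image IMAGE [COMMAND...]
# Pulls an image, then runs COMMAND in a throwaway container.
# --rm deletes the container on exit, so nothing lingers on your machine.
try_image() {
  image="$1"
  shift
  docker pull "$image" &&
  docker run --rm "$image" "$@"
}

# Example (python:3.11-slim is only an illustrative image):
# try_image python:3.11-slim python --version
```

Because the container is removed when the command exits, a failed experiment costs nothing more than the download.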

Installing Docker on Mac (My Step-by-Step)

It’s surprisingly simple. Here’s how I set it up:

- Download Docker Desktop:

Go to Docker’s official website and download Docker Desktop for Mac. - Install:

Double-click the.dmgfile and drag Docker to your Applications folder. - Launch Docker:

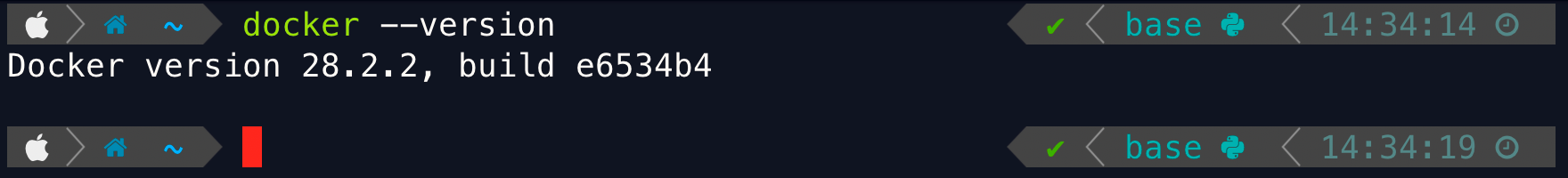

Open “Docker” from your Applications. You’ll see the whale icon in your status bar. - Test in Terminal:

Open Terminal and type: 'docker --version' If you get a version response, you’re set!
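If you want a slightly more thorough check than the version string, the sketch below also confirms that the Docker daemon is reachable. The function name check_docker and its messages are my own.

```shell
# check_docker: verify that the docker CLI exists and the daemon is reachable.
check_docker() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found on PATH"
    return 1
  fi

  docker --version

  # 'docker info' talks to the daemon, so it fails when Docker Desktop is not running.
  if docker info >/dev/null 2>&1; then
    echo "Docker daemon is running"
  else
    echo "Docker CLI is installed, but the daemon is not reachable"
    return 1
  fi
}

# Example:
# check_docker
```

If the second message appears, opening Docker Desktop and waiting for the whale icon to settle is usually the fix.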

Running a Large Language Model (LLM) Directly with Docker

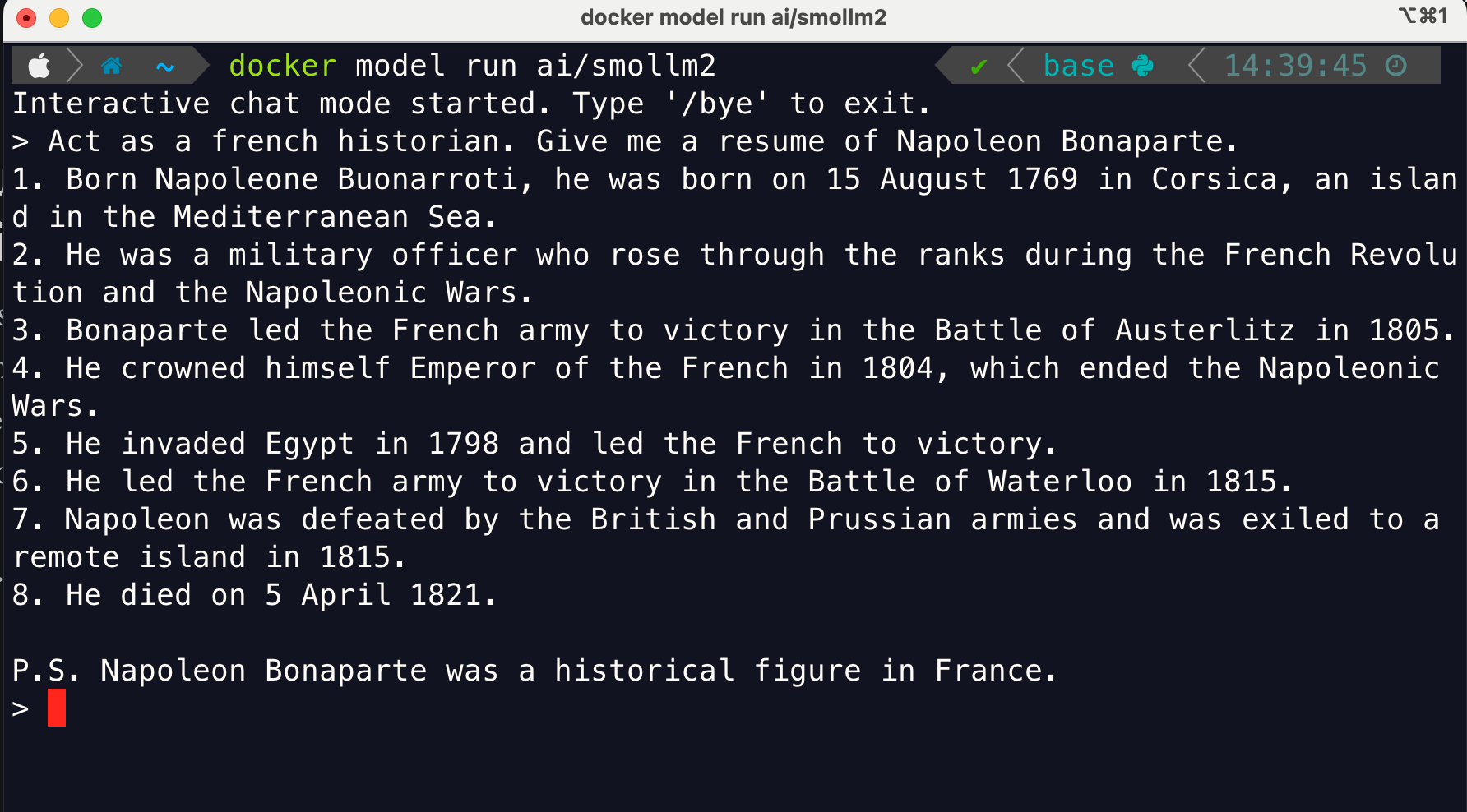

This blew my mind: running a powerful model can take a single command. Here’s my go-to way to get started with LLMs. How far you can go depends heavily on the computing power you have available, but this model, 'smollm2', is small enough to run on a recent computer.

$ docker model run ai/smollm2

There you have it: an LLM running on your computer. Isn’t it great?
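The 'docker model' commands come from Docker Model Runner, which I am assuming is enabled in your Docker Desktop install. As a small sketch, the helper below (ask_model is my own name) pulls a model explicitly and then sends it a single prompt instead of opening an interactive chat.

```shell
# ask_model MODEL PROMPT
# Pulls the model if needed, then sends a single prompt and prints the reply.
# Assumes Docker Model Runner is enabled in Docker Desktop.
ask_model() {
  model="$1"
  prompt="$2"
  docker model pull "$model" &&
  docker model run "$model" "$prompt"
}

# Example:
# ask_model ai/smollm2 "Explain what a container is in one sentence."
```

Running 'docker model list' afterwards should show which models are stored locally.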

Why Self-Host an LLM Instead of Using Only Cloud APIs?

While hosted LLM APIs (like OpenAI) offer out-of-the-box convenience, self-hosting a large language model on your own infrastructure can deliver major benefits—especially for businesses with a forward-thinking AI strategy:

1. Enhanced Data Privacy and Security

Data never leaves your environment. Sensitive customer information and proprietary insights are processed in-house, which makes it easier to meet compliance, regulatory, or client-imposed requirements.

2. Cost Predictability and Control

Cloud LLM APIs can get expensive fast—especially with high query volumes. Hosting your own model converts unknown, metered costs into predictable hardware and maintenance investments, giving you clearer budget expectations long-term.

3. Customization & Extensibility

Self-hosting gives teams full control: tune the model for your domain, integrate it tightly with other on-premises systems, or even fine-tune on your proprietary datasets. This kind of flexibility is rarely available with black-box API services.

4. No Vendor Lock-in & Business Continuity

You retain complete control over your LLM tools, architecture, and roadmap. No worries about sudden API changes, price hikes, or shifting third-party policies.

Next Steps: Explore Ollama and Self-Hosting LLMs

For readers eager to get practical and experiment with self-hosted LLMs, I encourage you to check out Ollama. Ollama offers an amazingly simple way to run and interact with open-source large language models locally, on your laptop or your own server.

It runs as a native app, and an official Docker image is also available if you want a consistent, isolated environment, so you can test-drive LLMs with very little setup.

How to Get Started:

- Install Ollama:

Simply visit Ollama’s install page. On this page you can download the Ollama app for Mac, but you can run it through Docker as well.

- Pull a Model and Ask Questions:

$ ollama run llama2

Or try:

$ ollama run mistral

Within minutes you’ll have a conversational LLM accessible on your Mac, ready to be integrated, experimented with, and imagined for your business’s unique use cases.
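If you would rather go the Docker route than install the Mac app, the sketch below follows the usage published for the ollama/ollama image as I understand it. The function names are mine, and the container name and the named volume are just conventional choices.

```shell
# start_ollama: run the Ollama server in a background container.
# -v ollama:/root/.ollama keeps downloaded models in a named volume,
# -p 11434:11434 exposes Ollama's API port on the host.
start_ollama() {
  docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama
}

# chat [MODEL]: open an interactive session inside the running container.
# Defaults to llama2 when no model is given.
chat() {
  docker exec -it ollama ollama run "${1:-llama2}"
}

# Example:
# start_ollama
# chat mistral
```

Keeping the models in a named volume means you can remove and recreate the container without downloading them again.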

Reflecting Forward

If your business is ready to move beyond the limits of external APIs and truly own its AI strategy, self-hosting LLMs offers security, cost control, and deep customization—right within your walls.

Whether you want help evaluating your infrastructure for AI, need guidance running Ollama or Dockerized LLMs, or want to plan a full deployment, I’m here to help.

For readers starting your own journey, diving into tools like Ollama is a perfect “next step” to make these possibilities tangible.

Let’s connect. Whether you’re a leader looking to empower your business, or a technologist eager to experiment with real, local large language models— drop me a message. I’ll help you bridge the gap between AI exploration and real, operational value.

Let me know how Docker transformed your AI journey, or if you’re just getting started—reach out! I’m still learning too.
